ECCE is only available prebuilt for 64 bit operating systems. You will NOT be able to install the binaries from EMSL on a 32 bit OS in your virtual machine.

!NOTE2! I use localhost in the set-up below. Be aware that this will block outside access: http://www.nwchem-sw.org/index.php/Special:AWCforum/st/id858/#post_3178

This post shows how to

1. compile ECCE for a 32 bit system

and

2. install the pre-built EMSL 64 bit binaries for a 64 bit system.

The reason why I show both is that if you are running a 32 bit host system you might not be able to run a 64 bit client OS.
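If your host runs Linux, a quick way to see which case applies is to look at what `uname -m` reports. The following is only a minimal sketch: the function name `arch_bits` is made up for this example, and the list of machine names is an assumption that covers common x86 values only.

```shell
# Map a machine string (as printed by `uname -m`) to a word size.
# The set of names handled here is an assumption and is not exhaustive.
arch_bits() {
    case "$1" in
        x86_64|amd64) echo 64 ;;
        i386|i486|i586|i686) echo 32 ;;
        *) echo unknown ;;
    esac
}

# Report the word size of the system this is run on.
echo "This system looks like: $(arch_bits "$(uname -m)") bit"
```

If this reports 32 bit on the host, the 32 bit route (compiling ECCE) is the safer one to follow.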

!NOTE!

This is essentially an update of an older post (http://verahill.blogspot.com.au/2012/06/ecce-in-virtual-machine-step-by-step.html) which describes how to install ECCE 6.3 on Debian 6.

Part of the reason for updating is this comment: http://verahill.blogspot.com.au/2012/06/ecce-in-virtual-machine-step-by-step.html?showComment=1369743102385#c8435880599103089732

If you are running a debian or redhat based linux distribution you should be able to install ecce natively without issue (e.g. http://verahill.blogspot.com.au/2013/01/325-compiling-ecce-64-on-debian-testing.html).

However, if you are using Windows or OS X you will probably want to install ecce inside a virtual machine, hence this post:

0. Install virtualbox

How you install virtualbox depends on your host system. I'm running debian wheezy AMD 64, so I did

    cd ~/Downloads
    wget http://download.virtualbox.org/virtualbox/4.2.12/virtualbox-4.2_4.2.12-84980~Debian~wheezy_amd64.deb
    sudo dpkg -i virtualbox-4.2_4.2.12-84980~Debian~wheezy_amd64.deb

Note that you are in no way obliged to download the Oracle packaged version of virtualbox. I'm using it because I've had issues with the debian packages in the past when using kernels that are very new (and that I've compiled myself). See e.g. http://verahill.blogspot.com.au/2013/05/419-talking-to-myself-in-public-dkms.html

See here for other versions of virtualbox: https://www.virtualbox.org/wiki/Linux_Downloads

1. Download the Debian 7 installation CD

If you are on a unix-like system you should be able to do something along the lines of

    mkdir ~/tmp
    cd ~/tmp
    wget http://cdimage.debian.org/debian-cd/7.0.0/i386/iso-cd/debian-7.0.0-i386-netinst.iso

to download the 32 bit iso and

    wget http://cdimage.debian.org/debian-cd/7.0.0/amd64/iso-cd/debian-7.0.0-amd64-netinst.iso

to download the 64 bit version. Please read the introduction to this post to learn about the consequences of installing a 32 bit system.
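Since the two download URLs differ only in the architecture name, the choice can be wrapped in a small helper. This is just a sketch: `debian_iso_url` is a hypothetical name, and the URL pattern is simply the one used in this post for Debian 7.0.0.

```shell
# Print the Debian 7.0.0 netinst ISO URL for a given architecture.
# Only the two architectures discussed in this post are accepted.
debian_iso_url() {
    case "$1" in
        i386|amd64)
            echo "http://cdimage.debian.org/debian-cd/7.0.0/$1/iso-cd/debian-7.0.0-$1-netinst.iso" ;;
        *)
            echo "unsupported architecture: $1" >&2
            return 1 ;;
    esac
}

# Example: print the URL of the 32 bit image.
debian_iso_url i386
```

To actually download the image you could then run something like `wget "$(debian_iso_url amd64)"`.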

2. Set up the virtual machine

(in this example I'm using the i386 version of debian, but the steps are identical for the amd64 version)

Start virtualbox and click on New:

Mount the CD:

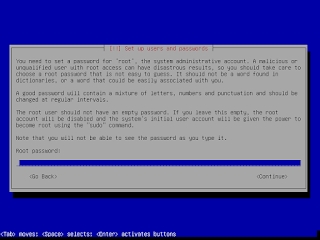
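I set the machine up through the GUI, but if you prefer the command line, something along the following lines might work with VBoxManage. Treat it as a sketch and not as what I actually did: the VM name, memory size, disk size and controller name are all made-up values, the subcommands are written as I'd expect them for virtualbox 4.2 (newer releases call `createhd` `createmedium`), and for a 64 bit guest you would use `Debian_64` as the OS type. The function only prints the commands so that you can read them before running anything.

```shell
# Print (not run) VBoxManage commands that create a VM and attach the ISO.
# All sizes and names below are hypothetical example values.
vm_commands() {
    vm=$1     # name of the virtual machine
    iso=$2    # path to the Debian netinst ISO
    cat <<EOF
VBoxManage createvm --name "$vm" --ostype Debian --register
VBoxManage modifyvm "$vm" --memory 1024
VBoxManage createhd --filename "$vm.vdi" --size 8192
VBoxManage storagectl "$vm" --name IDE --add ide
VBoxManage storageattach "$vm" --storagectl IDE --port 0 --device 0 --type hdd --medium "$vm.vdi"
VBoxManage storageattach "$vm" --storagectl IDE --port 1 --device 0 --type dvddrive --medium "$iso"
EOF
}

# Example: show the commands for a 32 bit guest called ecce32.
vm_commands ecce32 "$HOME/tmp/debian-7.0.0-i386-netinst.iso"
```

Once you are happy with the printed commands you can paste them into a terminal one by one.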

3. Install Debian 7 on the virtual machine

Click Start and the installation should start. (the steps are the same for the i386 and the amd64 versions)

Debian 7 (wheezy) is now installed. Log in.

At this point you can install whatever you want. I suggest installing the

- LXDE desktop environment (I know that it's a tautology).

- openjdk-7-jdk (you might be able to get away with -jre)

Reboot.

4a. 32 bit Virtual machine: Compile ECCE v 6.4

Note: you might have to go to http://ecce.emsl.pnl.gov/using/download.shtml and download the source in a browser if wget doesn't work.
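One way to tell whether wget really fetched the archive (and not, say, an HTML page from the download script) is to look at the first bytes of the file: a bzip2 file starts with the characters `BZh`. A minimal sketch, where `is_bzip2` is a name I made up for the example:

```shell
# Return success if the file starts with the bzip2 magic bytes "BZh".
is_bzip2() {
    [ "$(head -c 3 "$1" 2>/dev/null)" = "BZh" ]
}

# Example: check the downloaded source archive in the current directory.
if is_bzip2 ecce-v6.4-src.tar.bz2; then
    echo "looks like a bzip2 archive"
else
    echo "not a bzip2 archive (or file missing): download it in a browser instead"
fi
```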

    mkdir ~/tmp
    cd ~/tmp
    wget http://ecce.emsl.pnl.gov/cgi-bin/help/nwecce.pl/ecce-v6.4-src.tar.bz2
    tar xvf ecce-v6.4-src.tar.bz2
    sudo apt-get install bzip2 build-essential autoconf libtool ant pkg-config gtk+-2.0-dev libxt-dev csh gfortran openjdk-6-jdk python-dev libjpeg-dev imagemagick xterm
    cd ecce-v6.4/
    export ECCE_HOME=`pwd`
    cd build/
    ./build_ecce

At this point you'll be going through the same steps as shown in this post: http://verahill.blogspot.com.au/2013/01/325-compiling-ecce-64-on-debian-testing.html (yesterday's testing is today's stable)

    Checking prerequisites for building ECCE...
    If any of the following tools aren't found or aren't the right
    version, hit <ctrl>-c at the prompt and either find or install
    the tool before re-running this script. The whereis command is
    useful for finding tools not in your path.

    Found gcc in: /usr/bin/gcc
    ECCE requires gcc 3.2.x or 4.x.x
    This version: gcc (Debian 4.7.2-5) 4.7.2
    Hit return if this gcc is OK...
    [..]
    This works fine unless your site needs support for multiple platforms.

    Finished checking prerequisites for building ECCE.
    Do you want to skip these checks for future build_ecce invocations (y/n)? y

    Xerxes built
    ./build_ecce
    Mesa OpenGL built
    ./build_ecce
    wxWidgets built
    ./build_ecce
    wxPython built
    ./build_ecce
    Apache HTTP server built
    ./build_ecce
    ECCE built and distribution created in /home/verahill/tmp/ecce-v6.4
    cd ../
    ./install_ecce.v6.4.csh

and continue to section 5.

4b. 64 bit Virtual machine: Download ECCE v 6.4

    mkdir ~/tmp
    cd ~/tmp
    wget http://ecce.emsl.pnl.gov/cgi-bin/help/nwecce.pl/install_ecce.v6.4.rhel5-gcc4.1.2-m64.csh
    sudo apt-get install csh
    csh install_ecce.v6.4.rhel5-gcc4.1.2-m64.csh

and continue to section 5.

5. Install ECCE

    Main ECCE installation menu
    ===========================
    1) Help on main menu options
    2) Prerequisite software check
    3) Full install
    4) Full upgrade
    5) Application software install
    6) Application software upgrade
    7) Server install
    8) Server upgrade

    IMPORTANT: If you are uncertain about any aspect of installing
    or running ECCE at your site, please refer to the detailed
    ECCE Installation and Administration Guide at
    http://ecce.pnl.gov/docs/installation/2864B-Installation.pdf

    Hit <return> at prompts to accept the default value in brackets.

    Selection: [1] 3
    Host name: [eccehost] localhost
    Application installation directory: [/home/verahill/tmp/ecce-v6.4/ecce-v6.4/apps] /home/verahill/.ecce/apps
    Server installation directory: [/home/verahill/.ecce/server]

    ECCE v6.4 will be installed using the settings:
    Installation type: [full install]
    Host name: [localhost]
    Application installation directory: [/home/verahill/.ecce/apps]
    Server installation directory: [/home/verahill/.ecce/server]

    Are these choices correct (yes/no/quit)? [yes]

    Installing ECCE application software in /home/verahill/.ecce/apps...
    Extracting application distribution...
    Extracting NWChem binary distribution...
    Extracting NWChem common distribution...
    Extracting client WebHelp distribution...
    Configuring application software...
    Configuring NWChem...

    Installing ECCE server in /home/verahill/.ecce/server...
    Extracting data server in /home/verahill/.ecce/server/httpd...
    Extracting data libraries in /home/verahill/.ecce/server/data...
    Extracting Java Messaging Server in /home/verahill/.ecce/server/activemq...
    Configuring ECCE server...

    ECCE installation succeeded.

    ***************************************************************
    !! You MUST perform the following steps in order to use ECCE !!

    -- Unless only the user 'verahill' will be running ECCE, start
       the ECCE server as 'verahill' with:
       /home/verahill/.ecce/server/ecce-admin/start_ecce_server

    -- To register machines to run computational codes, please see
       the installation and compute resource registration manuals
       at http://ecce.pnl.gov/using/installguide.shtml

    -- Before running ECCE each user must source an environment
       setup script. For csh/tcsh users add this to ~/.cshrc:
         if ( -e /home/verahill/.ecce/apps/scripts/runtime_setup ) then
           source /home/verahill/.ecce/apps/scripts/runtime_setup
         endif
       For sh/bash users, add this to ~/.profile or ~/.bashrc:
         if [ -e /home/verahill/.ecce/apps/scripts/runtime_setup.sh ]; then
           . /home/verahill/.ecce/apps/scripts/runtime_setup.sh
         fi
    ***************************************************************

    echo 'export ECCE_HOME=/home/verahill/.ecce/apps' >> ~/.bashrc
    echo 'PATH=$PATH:/home/verahill/.ecce/server/ecce-admin/:/home/verahill/.ecce/apps/scripts/' >> ~/.bashrc
    source ~/.bashrc
    start_ecce_server
    ecce

Starting the server should print something like:

    /home/verahill/.ecce/server/httpd/bin/apachectl start: httpd started
    [1] 4137
    INFO BrokerService - ActiveMQ 5.1.0 JMS Message Broker (localhost) is starting
    INFO BrokerService - ActiveMQ JMS Message Broker (localhost, ID:ecce64bit-35450-1369895708602-0:0) started
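One thing to be aware of is that appending to ~/.bashrc with `>>` adds the same lines again every time you repeat the step. If you expect to re-run the installation a few times, a small helper that only appends a line when it is missing can keep the file tidy. This is a sketch, and `append_once` is a name I made up:

```shell
# Append a line to a file only if that exact line is not already present.
append_once() {
    line=$1
    file=$2
    # -x: match whole lines only, -F: treat the line as a fixed string
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example use (left commented out so that nothing is changed by accident):
# append_once 'export ECCE_HOME=/home/verahill/.ecce/apps' ~/.bashrc
```

Calling it twice with the same arguments leaves a single copy of the line in the file.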

Note that this is just the beginning. You should now compile nwchem and set up ecce to work with nwchem either locally or remotely.

This post shows one type of configuration: http://verahill.blogspot.com.au/2012/06/ecce-in-virtual-machine-step-by-step.html

But read the documentation and search this site and you'll find more examples.