Update 3: 9 May 2013. Fixed a couple of mistakes e.g. related to mpi. I also switched from ATLAS to acml -- when I build with ATLAS a lot of the example inputs do not converge.

Update 2: Pietro (see posts below) identified some odd behaviour when running test exam44 in which the scf failed to converge. The (temporary) fix for that has been included in the instructions below (change line 1664 in the file 'comp') -- most likely it's only a single file which needs to be compiled with -O0, but it will take a while to identify which one that is. Having to use -O0 on a performance critical piece of software is obviously unfortunate.

Update:

I've done this on ROCKS 5.4.3/CentOS 5.6 as well. Be aware that because of the ancient version of gfortran (4.1.2) on ROCKS there will be some limitations:

Alas, your version of gfortran does not support REAL*16,

so relativistic integrals cannot use quadruple precision.

Other than this, everything will work properly.

Other than that, follow the instructions below (including editing lked)

Original post:

Calculating solvation energies with implicit solvation models is a tough nut to crack. I like working with NWChem, but only one solvation model (COSMO) is implemented, and it has a history of giving results which differ wildly (>20 kcal/mol!) from those of other software packages, partly due to a bug which was fixed in 2011. It's fixed now -- using b3lyp/6-311++g** with the cosmo parameters in that post I got 63.68 kcal/mol for Cl-. I'm still not sure how to properly use the COSMO module, though (is rsolv 0 a reasonable value?). Obviously, my own unfamiliarity with the method is another issue, but that's where the idea of sane defaults comes in. So, time to test and compare with other models. Reading

Cramer, C. J.; Truhlar, D. G. Acc. Chem. Res. 2008, 41, 760–768 got me interested in GAMESS US again.

Gaussian is not really an attractive option for me anymore for performance reasons (caveat: as seen by me on my particular systems using precompiled binaries). Free (source code + cost) is obviously also always attractive. Being a linux sort of person also plays into it.

So, here's how to get your cluster set up for gamess US:

1. Go to

http://www.msg.chem.iastate.edu/GAMESS/download/register/

Select agree, then pick your version -- in my case

GAMESS version May 1, 2012 R1 for 64 bit (x86_64 compatible) under Linux with gnu compilers

Once you've completed your order you're told you may have to wait for up to a week before your registration is approved, but I got approved in less than 24 hours.

[2. Register for

GAMESSPLUS at

http://comp.chem.umn.edu/license/form-user.html

Again, it may take a little while to get approved -- in my case it was less than 24 hours. Also, it seems that you don't need a separate GAMESSPLUS anymore]

3. Download gamess-current.tar.gz as per the instructions and put it in

/opt/gamess (once you've created the folder)

4. If you're using AMD you're in luck -- set up acml on your system. In my case I put everything in /opt/acml/acml5.2.0

I've had bad luck with ATLAS: when I build with it, a lot of the example inputs do not converge.

5. Compile

sudo apt-get install build-essential gfortran openmpi-bin libopenmpi-dev libboost-all-dev

sudo mkdir /opt/gamess

sudo chown $USER /opt/gamess

cd /opt/gamess

tar xvf gamess-current.tar.gz

cd gamess/

You're now ready for the (lengthy) interactive configuration.

Note that

* the location of your openmpi libs may vary -- the debian libs are put in /usr/lib/openmpi/lib by default, but

I'm using my own compiled version which I've put in /opt/openmpi

* gamess is linked against the static libraries by default, so if you compiled atlas

as is described elsewhere on this blog, you'll be fine.

./config

This script asks a few questions, depending on your computer system,

to set up compiler names, libraries, message passing libraries,

and so forth.

You can quit at any time by pressing control-C, and then <return>.

Please open a second window by logging into your target machine,

in case this script asks you to 'type' a command to learn something

about your system software situation. All such extra questions will

use the word 'type' to indicate it is a command for the other window.

After the new window is open, please hit <return> to go on.

GAMESS can compile on the following 32 bit or 64 bit machines:

axp64 - Alpha chip, native compiler, running Tru64 or Linux

cray-xt - Cray's massively parallel system, running CNL

hpux32 - HP PA-RISC chips (old models only), running HP-UX

hpux64 - HP Intel or PA-RISC chips, running HP-UX

ibm32 - IBM (old models only), running AIX

ibm64 - IBM, Power3 chip or newer, running AIX or Linux

ibm64-sp - IBM SP parallel system, running AIX

ibm-bg - IBM Blue Gene (P or L model), these are 32 bit systems

linux32 - Linux (any 32 bit distribution), for x86 (old systems only)

linux64 - Linux (any 64 bit distribution), for x86_64 or ia64 chips

AMD/Intel chip Linux machines are sold by many companies

mac32 - Apple Mac, any chip, running OS X 10.4 or older

mac64 - Apple Mac, any chip, running OS X 10.5 or newer

sgi32 - Silicon Graphics Inc., MIPS chip only, running Irix

sgi64 - Silicon Graphics Inc., MIPS chip only, running Irix

sun32 - Sun ultraSPARC chips (old models only), running Solaris

sun64 - Sun ultraSPARC or Opteron chips, running Solaris

win32 - Windows 32-bit (Windows XP, Vista, 7, Compute Cluster, HPC Edition)

win64 - Windows 64-bit (Windows XP, Vista, 7, Compute Cluster, HPC Edition)

winazure - Windows Azure Cloud Platform running Windows 64-bit

type 'uname -a' to partially clarify your computer's flavor.

please enter your target machine name: linux64

Where is the GAMESS software on your system?

A typical response might be /u1/mike/gamess,

most probably the correct answer is /home/me/tmp/gamess

GAMESS directory? [/opt/gamess] /opt/gamess

Setting up GAMESS compile and link for GMS_TARGET=linux64

GAMESS software is located at GMS_PATH=/home/me/tmp/gamess

Please provide the name of the build locaation.

This may be the same location as the GAMESS directory.

GAMESS build directory? [/opt/gamess] /opt/gamess

Please provide a version number for the GAMESS executable.

This will be used as the middle part of the binary's name,

for example: gamess.00.x

Version? [00] 12r1

Linux offers many choices for FORTRAN compilers, including the GNU

compiler set ('g77' in old versions of Linux, or 'gfortran' in

current versions), which are included for free in Unix distributions.

There are also commercial compilers, namely Intel's 'ifort',

Portland Group's 'pgfortran', and Pathscale's 'pathf90'. The last

two are not common, and aren't as well tested as the others.

type 'rpm -aq | grep gcc' to check on all GNU compilers, including gcc

type 'which gfortran' to look for GNU's gfortran (a very good choice),

type 'which g77' to look for GNU's g77,

type 'which ifort' to look for Intel's compiler,

type 'which pgfortran' to look for Portland Group's compiler,

type 'which pathf90' to look for Pathscale's compiler.

Please enter your choice of FORTRAN: gfortran

gfortran is very robust, so this is a wise choice.

Please type 'gfortran -dumpversion' or else 'gfortran -v' to

detect the version number of your gfortran.

This reply should be a string with at least two decimal points,

such as 4.1.2 or 4.6.1, or maybe even 4.4.2-12.

The reply may be labeled as a 'gcc' version,

but it is really your gfortran version.

Please enter only the first decimal place, such as 4.1 or 4.6:

4.6

Good, the newest gfortran can compile REAL*16 data type.

hit <return> to continue to the math library setup.

Linux distributions do not include a standard math library.

There are several reasonable add-on library choices,

MKL from Intel for 32 or 64 bit Linux (very fast)

ACML from AMD for 32 or 64 bit Linux (free)

ATLAS from www.rpmfind.net for 32 or 64 bit Linux (free)

and one very unreasonable option, namely 'none', which will use

some slow FORTRAN routines supplied with GAMESS. Choosing 'none'

will run MP2 jobs 2x slower, or CCSD(T) jobs 5x slower.

Some typical places (but not the only ones) to find math libraries are

Type 'ls /opt/intel/mkl' to look for MKL

Type 'ls /opt/intel/Compiler/mkl' to look for MKL

Type 'ls /opt/intel/composerxe/mkl' to look for MKL

Type 'ls -d /opt/acml*' to look for ACML

Type 'ls -d /usr/local/acml*' to look for ACML

Type 'ls /usr/lib64/atlas' to look for Atlas

Enter your choice of 'mkl' or 'atlas' or 'acml' or 'none': acml

Type 'ls -d /opt/acml*' or 'ls -d /usr/local/acml*'

and note the the full path, which includes a version number.

enter this full pathname: /opt/acml/acml5.2.0

Math library 'acml' will be taken from /opt/acml/acml5.2.0/gfortran64_int64/lib

please hit <return> to compile the GAMESS source code activator

gfortran -o /home/me/tmp/gamess/build/tools/actvte.x actvte.f

unset echo

Source code activator was successfully compiled.

please hit <return> to set up your network for Linux clusters.

If you have a slow network, like Gigabit Ethernet (GE), or

if you have so few nodes you won't run extensively in parallel, or

if you have no MPI library installed, or

if you want a fail-safe compile/link and easy execution,

choose 'sockets'

to use good old reliable standard TCP/IP networking.

If you have an expensive but fast network like Infiniband (IB), and

if you have an MPI library correctly installed,

choose 'mpi'.

communication library ('sockets' or 'mpi')? mpi

The MPI libraries which work well on linux64/Infiniband are

Intel's MPI (impi)

MVAPICH2

SGI's mpt from ProPack, on Altix/ICE systems

Other libraries may work, please see 'readme.ddi' for info.

The choices listed above will compile and link easily,

and are known to run correctly and efficiently.

Enter 'sockets' if you just changed your mind about trying MPI.

Enter MPI library (impi, mvapich2, mpt, sockets): openmpi

MPI can be installed in many places, so let's find openmpi.

The person who installed your MPI can tell you where it really is.

impi is probably located at a directory like

/opt/intel/impi/3.2

/opt/intel/impi/4.0.1.007

/opt/intel/impi/4.0.2.003

include iMPI's version numbers in your reply below.

mvapich2 could be almost anywhere, perhaps some directory like

/usr/mpi/gcc/mvapich2-1.6

openmpi could be almost anywhere, perhaps some directory like

/usr/mpi/openmpi-1.4.3

mpt is probably located at a directory like

/opt/sgi/mpt/mpt-1.26

Please enter your openmpi's location: /opt/openmpi/1.6

Your configuration for GAMESS compilation is now in

/home/me/tmp/gamess/build/install.info

Now, please follow the directions in

/opt/gamess/machines/readme.unix

I next did this:

cd /opt/gamess/ddi

./compddi

cd ../

Edit the file 'comp' and change line 1664 from

1664 set OPT='-O2'

to

1664 set OPT='-O0'

or test case

exam44.inp in

tests/standard will fail due to lack of SCF convergence. (I've tried -O1 as well with no luck)
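If you'd rather not edit 'comp' by hand, the change can be scripted. This is only a minimal sketch: set_comp_opt is a helper name I made up, and the line number (1664) applies to the release used in this post, so check it against your own copy first. The function refuses to do anything unless the expected text is actually on that line, and it keeps a backup.

```shell
#!/bin/sh
# Hypothetical helper (not part of GAMESS): switch -O2 to -O0 on one line of 'comp'.
# Usage: set_comp_opt FILE LINE
set_comp_opt() {
    file=$1
    line=$2
    # Refuse to touch the file unless the expected text is on that line.
    if ! sed -n "${line}p" "$file" | grep -q "set OPT='-O2'"; then
        echo "line $line of $file does not contain set OPT='-O2'" >&2
        return 1
    fi
    cp "$file" "$file.orig"    # keep a backup of the original
    # Rewrite only the requested line; writing into the existing file keeps its permissions.
    sed "${line}s/set OPT='-O2'/set OPT='-O0'/" "$file.orig" > "$file"
}

# Example (line number as in the version described in this post):
#   cd /opt/gamess && set_comp_opt comp 1664
```

If the function complains, open 'comp' and look for the set OPT='-O2' line in the linux64/gfortran section instead of trusting the line number.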

Continue your compilation:

./compall

Running 'compall' reads "install.info" which I include below:

#!/bin/csh

# compilation configuration for GAMESS

# generated on beryllium

# generated at Friday 21 September 08:48:09 EST 2012

setenv GMS_PATH /opt/gamess

setenv GMS_BUILD_DIR /opt/gamess

# machine type

setenv GMS_TARGET linux64

# FORTRAN compiler setup

setenv GMS_FORTRAN gfortran

setenv GMS_GFORTRAN_VERNO 4.6

# mathematical library setup

setenv GMS_MATHLIB acml

setenv GMS_MATHLIB_PATH /opt/acml/acml5.2.0//gfortran64_int64/lib

# parallel message passing model setup

setenv GMS_DDI_COMM mpi

setenv GMS_MPI_LIB openmpi

setenv GMS_MPI_PATH /opt/openmpi/1.6

Note that you can't change the gfortran version here either -- 4.7 won't be recognised.
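Since compall and lked both pick up their settings from install.info, it can be handy to check what a given variable is set to before starting a long compile. A small sketch follows; gms_setting is a name I made up, and the path in the example assumes install.info sits in the build directory (/opt/gamess here).

```shell
#!/bin/sh
# Hypothetical helper: print the value of one 'setenv NAME VALUE' line
# from an install.info-style file.
# Usage: gms_setting FILE NAME
gms_setting() {
    awk -v name="$2" '$1 == "setenv" && $2 == name { print $3 }' "$1"
}

# Example (path is an assumption, adjust to your build directory):
#   gms_setting /opt/gamess/install.info GMS_MATHLIB_PATH
```

An empty result means the variable isn't set in the file at all.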

Anyway, compilation will take a while -- enough for some coffee and reading.

In the next step you may have problems with openmpi --

lked looks in e.g. /opt/openmpi/1.6/lib64 but you'll probably only have /opt/openmpi/1.6/lib

Edit

lked and change

958 case openmpi:

959 set MPILIBS="-L$GMS_MPI_PATH/lib64"

960 set MPILIBS="$MPILIBS -lmpi"

961 breaksw

to

958 case openmpi:

959 set MPILIBS="-L$GMS_MPI_PATH/lib"

960 set MPILIBS="$MPILIBS -lmpi -lpthread"

961 breaksw
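To find out whether you need the lib64 -> lib change at all, you can check which of the two directories exists under your openmpi prefix. A minimal sketch, with mpi_libdir being a made-up helper name:

```shell
#!/bin/sh
# Hypothetical helper: report which library directory exists under an
# Open MPI install prefix (lib64 is what the unedited lked expects).
# Usage: mpi_libdir PREFIX
mpi_libdir() {
    prefix=$1
    if [ -d "$prefix/lib64" ]; then
        echo "$prefix/lib64"
    elif [ -d "$prefix/lib" ]; then
        echo "$prefix/lib"
    else
        echo "no lib or lib64 directory under $prefix" >&2
        return 1
    fi
}

# Example: mpi_libdir /opt/openmpi/1.6
```

If it prints a path ending in lib64, the -L path in lked can stay as it is; if it ends in lib, change it as shown above.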

Generate the runtime file:

./lked gamess 12r1 >& lked.log

Done!

To compile with openblas:

1. edit install.info

#!/bin/csh

# compilation configuration for GAMESS

# generated on tantalum

# generated at Friday 21 September 14:01:54 EST 2012

setenv GMS_PATH /opt/gamess

setenv GMS_BUILD_DIR /opt/gamess

# machine type

setenv GMS_TARGET linux64

# FORTRAN compiler setup

setenv GMS_FORTRAN gfortran

setenv GMS_GFORTRAN_VERNO 4.6

# mathematical library setup

setenv GMS_MATHLIB openblas

setenv GMS_MATHLIB_PATH /opt/openblas/lib

# parallel message passing model setup

setenv GMS_DDI_COMM mpi

setenv GMS_MPI_LIB openmpi

setenv GMS_MPI_PATH /opt/openmpi/1.6

2. edit lked

Add lines 462-466, which set up the openblas switch:

453 endif

454 set BLAS=' '

455 breaksw

456

457 case acml:

458 # do a static link so that only compile node needs to install ACML

459 set MATHLIBS="$GMS_MATHLIB_PATH/libacml.a"

460 set BLAS=' '

461 breaksw

462 case openblas:

463 # do a static link so that only compile node needs to install openblas

464 set MATHLIBS="$GMS_MATHLIB_PATH/libopenblas.a"

465 set BLAS=' '

466 breaksw

467

468 case none:

469 default:

470 echo "Warning. No math library was found, you should install one."

471 echo " MP2 calculations speed up about 2x with a math library."

472 echo "CCSD(T) calculations speed up about 5x with a math library."

473 set BLAS='blas.o'

474 set MATHLIBS=' '

475 breaksw

3. Link

./lked gamess 12r2 >& lked.log

You now have gamess.12r1.x which uses ACML, and gamess.12r2.x which uses openblas.

To run:

The rungms file was a bit too 'clever' for me, so I boiled it down to a file called gmrun, which I made executable (chmod +x gmrun) and put in /opt/gamess:

#!/bin/csh

set TARGET=mpi

set SCR=$HOME/scratch

set USERSCR=/scratch

set GMSPATH=/opt/gamess

set JOB=$1

set VERNO=$2

set NCPUS=$3

if ( $JOB:r.inp == $JOB ) set JOB=$JOB:r

echo "Copying input file $JOB.inp to your run's scratch directory..."

cp $JOB.inp $SCR/$JOB.F05

setenv TRAJECT $USERSCR/$JOB.trj

setenv RESTART $USERSCR/$JOB.rst

setenv INPUT $SCR/$JOB.F05

setenv PUNCH $USERSCR/$JOB.dat

if ( -e $TRAJECT ) rm $TRAJECT

if ( -e $PUNCH ) rm $PUNCH

if ( -e $RESTART ) rm $RESTART

source $GMSPATH/gms-files.csh

setenv LD_LIBRARY_PATH /opt/openmpi/1.6/lib:$LD_LIBRARY_PATH

set path= ( /opt/openmpi/1.6/bin $path )

/opt/openmpi/1.6/bin/mpiexec -n $NCPUS $GMSPATH/gamess.$VERNO.x|tee $JOB.out

cp $PUNCH .

Note that I actually do have two scratch directories -- one in ~/scratch and one in /scratch. The SCR directory should be local to the node as well as spacious, while USERSCR can be a smaller, networked directory.
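gmrun doesn't create the scratch directories, so it will fail at the cp step if they are missing. Something along these lines can be run once per node beforehand. It's only a sketch, and prepare_scratch is a made-up name:

```shell
#!/bin/sh
# Hypothetical helper: create a scratch directory if needed and
# confirm that the current user can write to it.
# Usage: prepare_scratch DIR
prepare_scratch() {
    dir=$1
    mkdir -p "$dir" || return 1
    [ -w "$dir" ]
}

# Example, with the two locations used in gmrun above:
#   prepare_scratch "$HOME/scratch" && prepare_scratch /scratch
```

Creating /scratch itself will normally need root, after which it can be chown'ed to the user in the same way as /opt/gamess above.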

Finally do

echo 'export PATH=$PATH:/opt/gamess' >> ~/.bashrc

Anyway.

Navigate to your tests/standard folder where there's a lot of exam*.inp files and do

gmrun exam12 12r1 4

where exam12 (or exam12.inp) is the name of the input file, 12r1 is the version number (that you set above) and 4 is the number of processors/threads.

---------------------

ELECTROSTATIC MOMENTS

---------------------

POINT 1 X Y Z (BOHR) CHARGE

0.000000 -0.000000 0.000000 -0.00 (A.U.)

DX DY DZ /D/ (DEBYE)

-0.000000 0.000000 -0.000000 0.000000

...... END OF PROPERTY EVALUATION ......

CPU 0: STEP CPU TIME= 0.02 TOTAL CPU TIME= 2.2 ( 0.0 MIN)

TOTAL WALL CLOCK TIME= 2.3 SECONDS, CPU UTILIZATION IS 97.78%

$VIB

IVIB= 0 IATOM= 0 ICOORD= 0 E= -76.5841347569

-6.175208802E-40-6.175208802E-40-4.411868660E-07 6.175208802E-40 6.175208802E-40

4.411868660E-07-1.441225933E-40-1.441225933E-40 1.672333111E-06 1.441225933E-40

1.441225933E-40-1.672333111E-06

-4.053383177E-34 4.053383177E-34-2.257541709E-15

......END OF GEOMETRY SEARCH......

CPU 0: STEP CPU TIME= 0.00 TOTAL CPU TIME= 2.2 ( 0.0 MIN)

TOTAL WALL CLOCK TIME= 2.3 SECONDS, CPU UTILIZATION IS 97.35%

990473 WORDS OF DYNAMIC MEMORY USED

EXECUTION OF GAMESS TERMINATED NORMALLY Fri Sep 21 14:27:17 2012

DDI: 263624 bytes (0.3 MB / 0 MWords) used by master data server.

----------------------------------------

CPU timing information for all processes

========================================

0: 2.160 + 0.44 = 2.204

1: 2.220 + 0.20 = 2.240

2: 2.212 + 0.32 = 2.244

3: 4.240 + 0.04 = 4.244

4: 4.260 + 0.00 = 4.260

5: 4.256 + 0.08 = 4.264

----------------------------------------

Done!
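With a folder full of test outputs it's easier to let grep look for the normal-termination line shown above than to read each file. A minimal sketch, where gamess_ok is a made-up helper name and the .out files are the ones gmrun writes via tee:

```shell
#!/bin/sh
# Hypothetical helper: succeed if a GAMESS output file reports normal termination.
# Usage: gamess_ok FILE
gamess_ok() {
    grep -q "EXECUTION OF GAMESS TERMINATED NORMALLY" "$1"
}

# Example: flag the test outputs in the current directory that did not finish cleanly.
#   for out in exam*.out; do
#       gamess_ok "$out" || echo "check $out"
#   done
```

Note that this only tells you that a job finished, not that the numbers are right, so it's a first filter and nothing more.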

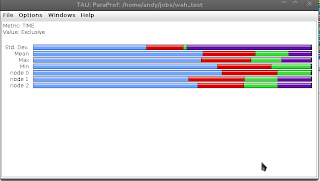

Looking at another test case (acetate w/ cosmo) I get the following scaling on a single node as a function of processors:

shmmax issue:

Anyone who has been using nwchem will be familiar with this:

INPUT CARD> $END

DDI Process 0: shmget returned an error.

Error EINVAL: Attempting to create 160525768 bytes of shared memory.

Check system limits on the size of SysV shared memory segments.

The file ~/gamess/ddi/readme.ddi contains information on how to display

the current SystemV memory settings, and how to increase their sizes.

Increasing the setting requires the root password, and usually a sytem reboot.

DDI Process 0: error code 911

The fix is the same. First do

cat /proc/sys/kernel/shmmax

and look at the value. Then set it to the desired value according to this post:

http://verahill.blogspot.com.au/2012/04/solution-to-nwchem-shmmax-too-small.html

e.g.

sudo sysctl -w kernel.shmmax=6269961216
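To see whether the current limit is big enough before launching a job, compare it with the size in the error message. A small sketch, with shmmax_ok being a made-up name. It uses awk for the comparison because recent kernels may report a shmmax value too large for the shell's integer tests.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a shmmax value covers a requested segment size.
# Usage: shmmax_ok REQUIRED_BYTES CURRENT_SHMMAX
shmmax_ok() {
    # awk compares as floating point, which copes with very large values.
    awk -v need="$1" -v have="$2" 'BEGIN { exit !(have + 0 >= need + 0) }'
}

# Example, using the size from the error message above:
#   if ! shmmax_ok 160525768 "$(cat /proc/sys/kernel/shmmax)"; then
#       echo "shmmax is too small; raise it with sysctl"
#   fi
```

Keep in mind that a value set with sysctl -w is lost on reboot; putting a kernel.shmmax line in /etc/sysctl.conf is the usual way of making it stick.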

gfortran version issue:

Even though you likely have version 4.7.x of gfortran, pick 4.6 or you will get:

Please type 'gfortran -dumpversion' or else 'gfortran -v' to

detect the version number of your gfortran.

This reply should be a string with at least two decimal points,

such as 4.1.2 or 4.6.1, or maybe even 4.4.2-12.

The reply may be labeled as a 'gcc' version,

but it is really your gfortran version.

Please enter only the first decimal place, such as 4.1 or 4.6:

4.7

The gfortran version number is not recognized.

It should only have one decimal place, such as 4.x

The reason is this (code from config):

switch ($GMS_GFORTRAN_VERNO)

case 4.1:

case 4.2:

case 4.3:

case 4.4:

case 4.5:

echo " Alas, your version of gfortran does not support REAL*16,"

echo " so relativistic integrals cannot use quadruple precision."

echo " Other than this, everything will work properly."

breaksw

case 4.6:

echo " Good, the newest gfortran can compile REAL*16 data type."

breaksw

default:

echo "The gfortran version number is not recognized."

echo "It should only have one decimal place, such as 4.x"

exit 4

breaksw

endsw
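In other words, config only knows about 4.1 through 4.6. If you want to script the answer, something like the following turns the output of gfortran -dumpversion into a value config will accept. It is a sketch only: gms_gfortran_verno is a made-up name, and mapping 4.7 to 4.6 is simply the workaround from this post, not something GAMESS itself documents. Anything else is rejected rather than guessed at.

```shell
#!/bin/sh
# Hypothetical helper (not part of GAMESS): turn a full gfortran version
# string into the 4.x value that the config switch shown above accepts.
# Usage: gms_gfortran_verno VERSION
gms_gfortran_verno() {
    full=$1
    # Keep only "major.minor", e.g. 4.7.2 -> 4.7 and 4.4.2-12 -> 4.4
    short=$(printf '%s\n' "$full" | sed -n 's/^\([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p')
    case $short in
        4.1|4.2|4.3|4.4|4.5|4.6)
            echo "$short" ;;
        4.7)
            # Workaround used in this post: answer 4.6 when you have 4.7.x.
            echo "4.6" ;;
        *)
            echo "unrecognised gfortran version: $full" >&2
            return 1 ;;
    esac
}

# Example: gms_gfortran_verno "$(gfortran -dumpversion)"
```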