You can download without registering, but please do register, as the number of registered users tends to be important for funding and evaluation of software development in academia: http://www.cs.uoregon.edu/Research/tau/downloads.php

I'm not really sure about how to use PDT, and I've used Tau without it before without any problems.

The compilation order below is also important: pdt won't build without libpdb.a, which is generated by tau, but you can't configure tau with -pdt if pdt doesn't exist yet. That is why tau is built twice below, first without and then with -pdt.

Compiling

sudo mkdir /opt/tau
sudo chown $USER /opt/tau
cd /opt/tau

wget http://tu-dresden.de/die_tu_dresden/zentrale_einrichtungen/zih/forschung/software_werkzeuge_zur_unterstuetzung_von_programmierung_und_optimierung/otf/dateien/OTF-1.12.2salmon.tar.gz
tar xvf OTF-1.12.2salmon.tar.gz
cd OTF-1.12.2salmon/
./configure --prefix=/opt/tau/OTF
make
make install
cd ../

wget http://tau.uoregon.edu/tau.tgz
tar xvf tau.tgz
cd tau-2.22-p1/
./configure -mpilib=/usr/lib/openmpi/lib -prefix=/opt/tau -openmp -TRACE -iowrapper -otf=/opt/tau/OTF -pthread
make install
cd ../

wget http://tau.uoregon.edu/pdt.tar.gz
tar xvf pdt.tar.gz
cd pdtoolkit-3.18.1/
./configure -prefix=/opt/tau/pdt
make
make install

cd ../tau-2.22-p1/
./configure -mpilib=/usr/lib/openmpi/lib -prefix=/opt/tau -openmp -TRACE -iowrapper -pthread -otf=/opt/tau/OTF -pdt=/opt/tau/pdt
make install

Testing

Time to try it out on something parallel.

First set the path

PATH=$PATH:/opt/tau/x86_64/bin
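A quick way to confirm that the path change took effect is to check that the tools used in the rest of this post can be found. The following is only a sketch: `path_append` is a hypothetical helper (not something TAU ships), and the directory is simply the one used above.

```shell
# Sketch: append a directory to PATH only once, then look for the TAU tools.
# path_append is a hypothetical helper, not part of TAU.
path_append() {
    case ":$PATH:" in
        *":$1:"*) ;;              # already on PATH, nothing to do
        *) PATH="$PATH:$1" ;;     # otherwise append it
    esac
}

path_append /opt/tau/x86_64/bin
export PATH

# Report which of the tools used in this post are reachable.
for tool in tau_exec pprof paraprof; do
    if command -v "$tool" >/dev/null 2>&1; then
        echo "$tool: $(command -v "$tool")"
    else
        echo "$tool: not found"
    fi
done
```

Running it twice leaves PATH unchanged the second time, which avoids the path growing every time the snippet is sourced.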

I used nwchem with this input file, co2.nw:

title "co nmr"
geometry
 c 0 0 0
 o 0 0 1.13
end
basis
 * library "6-311+G*"
end
property
 shielding
end
dft
 direct
 grid fine
 mult 1
 xc HFexch 0.05 slater 0.95 becke88 nonlocal 0.72 vwn_5 1 perdew91 0.81
end
task dft property

and ran it using

mpirun -n 3 tau_exec nwchem co2.nw

which ends with

Total times cpu: 4.8s wall: 7.6s

It's obviously a bit too short, but will do for illustration purposes.

That generates a set of files, profile.*.0.0, one for each MPI process, i.e. profile.0.0.0, profile.1.0.0 and profile.2.0.0 in this particular case (the first number is the rank, which starts at 0). There are a lot of options for tracing, using hardware counters etc.; see http://www.cs.uoregon.edu/Research/tau/docs/newguide/
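Before looking at the numbers it can be worth checking that every process actually wrote a profile. A minimal sketch, assuming the files sit in the current directory and that three processes were started as above; `count_profiles` is a hypothetical helper name.

```shell
# Sketch: compare the number of profile files with the number of MPI processes.
# count_profiles is a hypothetical helper; 3 matches the -n 3 used above.
count_profiles() {
    dir=${1:-.}
    # Count regular files named profile.<x>.<y>.<z> directly inside dir.
    find "$dir" -maxdepth 1 -type f -name 'profile.*.*.*' | wc -l | tr -d ' '
}

expected=3
found=$(count_profiles .)
if [ "$found" -eq "$expected" ]; then
    echo "ok: $found profile files"
else
    echo "expected $expected profile files, found $found"
fi
```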

pprof -s

Reading Profile files in profile.*

FUNCTION SUMMARY (total):
---------------------------------------------------------------------------------------
%Time    Exclusive    Inclusive       #Call      #Subrs  Inclusive Name
              msec   total msec                          usec/call
---------------------------------------------------------------------------------------
100.0       15,813       25,931           3       14276    8643959 .TAU application
 18.8        4,870        4,870       10272           0        474 MPI_Barrier()
 12.1        3,138        3,138           3           0    1046279 MPI_Init()
  8.1        2,090        2,090         818           0       2556 MPI_Recv()
  0.0            9            9           3           0       3173 MPI_Finalize()
  0.0            3            3          24           0        128 MPI_Bcast()
  0.0            2            2           6           0        463 MPI_Comm_dup()
  0.0            1            1         790           0          2 MPI_Comm_size()
  0.0        0.872        0.872         818           0          1 MPI_Send()
  0.0        0.294        0.294         841           0          0 MPI_Comm_rank()
  0.0         0.17         0.17         674           0          0 MPI_Get_count()
  0.0        0.111        0.111           3           0         37 MPI_Comm_free()
  0.0        0.026        0.026           3           0          9 MPI_Errhandler_set()
  0.0        0.024        0.024           6           0          4 MPI_Group_rank()
  0.0         0.02         0.02           6           0          3 MPI_Comm_compare()
  0.0        0.015        0.015           4           0          4 MPI_Comm_group()
  0.0        0.008        0.008           4           0          2 MPI_Group_size()
  0.0        0.004        0.004           1           0          4 MPI_Group_translate_ranks()

FUNCTION SUMMARY (mean):
---------------------------------------------------------------------------------------
%Time    Exclusive    Inclusive       #Call      #Subrs  Inclusive Name
              msec   total msec                          usec/call
---------------------------------------------------------------------------------------
100.0        5,271        8,643           1     4758.67    8643959 .TAU application
 18.8        1,623        1,623        3424           0        474 MPI_Barrier()
 12.1        1,046        1,046           1           0    1046279 MPI_Init()
  8.1          696          696     272.667           0       2556 MPI_Recv()
  0.0            3            3           1           0       3173 MPI_Finalize()
  0.0            1            1           8           0        128 MPI_Bcast()
  0.0        0.926        0.926           2           0        463 MPI_Comm_dup()
  0.0        0.436        0.436     263.333           0          2 MPI_Comm_size()
  0.0        0.291        0.291     272.667           0          1 MPI_Send()
  0.0        0.098        0.098     280.333           0          0 MPI_Comm_rank()
  0.0       0.0567       0.0567     224.667           0          0 MPI_Get_count()
  0.0        0.037        0.037           1           0         37 MPI_Comm_free()
  0.0      0.00867      0.00867           1           0          9 MPI_Errhandler_set()
  0.0        0.008        0.008           2           0          4 MPI_Group_rank()
  0.0      0.00667      0.00667           2           0          3 MPI_Comm_compare()
  0.0        0.005        0.005     1.33333           0          4 MPI_Comm_group()
  0.0      0.00267      0.00267     1.33333           0          2 MPI_Group_size()
  0.0      0.00133      0.00133    0.333333           0          4 MPI_Group_translate_ranks()

...which I can't pretend to understand. Presumably the exclusive and inclusive times on the first line of the mean summary correspond to the cpu time and the wall time reported by nwchem (4.8 and 7.6 s vs 5,271 and 8,643 ms).
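To put the two sets of numbers in the same units, the comma-grouped msec values from pprof can be converted to seconds. This is just a sketch with a hypothetical helper, `msec_to_sec`. The last line rests on an assumption of mine that the post does not confirm: that nwchem only starts its own wall clock once MPI_Init() has returned.

```shell
# Sketch: convert pprof's comma-grouped msec values to seconds.
# msec_to_sec is a hypothetical helper name.
msec_to_sec() {
    # Strip the thousands separators, then divide by 1000.
    printf '%s\n' "$1" | tr -d ',' | awk '{ printf "%.3f\n", $1 / 1000 }'
}

echo "mean exclusive: $(msec_to_sec 5,271) s (nwchem cpu:  4.8 s)"
echo "mean inclusive: $(msec_to_sec 8,643) s (nwchem wall: 7.6 s)"

# ASSUMPTION (not from the post): nwchem's wall clock excludes MPI_Init().
total=8643   # mean inclusive msec of ".TAU application"
init=1046    # mean inclusive msec of MPI_Init()
echo "inclusive minus MPI_Init: $(msec_to_sec $((total - init))) s"
```

Under that assumption the remainder is 7.597 s, which is close to the 7.6 s wall time that nwchem prints, but it is only one possible reading of the table.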

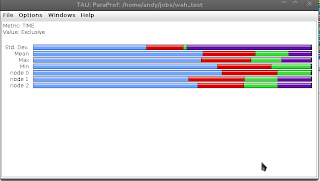

A visual representation can be had by launching paraprof:

paraprof

Now it's time to explore...

The one thing that doesn't seem to work is visualisation of the communication matrix...

Failed attempt to build with vampirtrace

sudo mkdir /opt/tau
sudo chown $USER /opt/tau
cd /opt/tau

wget http://tu-dresden.de/die_tu_dresden/zentrale_einrichtungen/zih/forschung/software_werkzeuge_zur_unterstuetzung_von_programmierung_und_optimierung/otf/dateien/OTF-1.12.2salmon.tar.gz
tar xvf OTF-1.12.2salmon.tar.gz
cd OTF-1.12.2salmon/
./configure --prefix=/opt/tau/OTF
make
make install
cd ../

wget http://tu-dresden.de/die_tu_dresden/zentrale_einrichtungen/zih/forschung/software_werkzeuge_zur_unterstuetzung_von_programmierung_und_optimierung/vampirtrace/dateien/VampirTrace-5.14.1.tar.gz
tar xvf VampirTrace-5.14.1.tar.gz
cd VampirTrace-5.14.1/
./configure --prefix=/opt/tau/vampirtrace --with-mpi-dir=/usr/lib/openmpi/lib --with-extern-otf-dir=/opt/tau/OTF
make
make install
cd ../

wget http://tau.uoregon.edu/tau.tgz
tar xvf tau.tgz
cd tau-2.22-p1/
./configure -mpilib=/usr/lib/openmpi/lib -prefix=/opt/tau -openmp -TRACE -iowrapper -otf=/opt/tau/OTF -vampirtrace=/opt/tau/vampirtrace
make install

It builds fine, but during execution of mpirun -n 2 tau_exec... I get

Error: No matching binding for 'mpi' in directory /opt/tau/x86_64/lib
Available bindings (/opt/tau/x86_64/lib):
Error: No matching binding for 'mpi' in directory /opt/tau/x86_64/lib
Available bindings (/opt/tau/x86_64/lib):
/opt/tau/x86_64/lib/shared-disable
/opt/tau/x86_64/lib/shared-disable
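The message appears once per process, and the only binding it lists is shared-disable. To see what a given build offers without going through mpirun, the directory named in the error can be listed directly. A minimal sketch: `list_bindings` is a hypothetical helper, and the `shared-` prefix is taken from the error output above rather than from the TAU documentation.

```shell
# Sketch: list the binding directories under TAU's lib directory.
# list_bindings is a hypothetical helper; the default path comes from the
# error message above.
list_bindings() {
    libdir=${1:-/opt/tau/x86_64/lib}
    for d in "$libdir"/shared-*; do
        # Skip plain files and the unexpanded pattern when nothing matches.
        [ -d "$d" ] && basename "$d"
    done
    return 0
}

list_bindings
```

Comparing its output for this build and for the working build from the first half of the post should show whether the vampirtrace configuration produced any binding with mpi in its name.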